| Adam Geitgey | https://medium.com/@ageitgey |

Know someone who should be on this list? Tweet @scottontech

See also:

Like the weather, everybody complains about programming, but nobody does anything about it. That’s changing, and like an unexpected storm, the change comes from an unexpected direction: Machine Learning / Deep Learning.

I know, you are tired of hearing about Deep Learning. Who isn’t by now? But programming has been stuck in a rut for a very long time and it’s time we do something about it.

Lots of silly little programming wars continue to be fought that decide nothing. Functions vs objects; this language vs that language; this public cloud vs that public cloud vs this private cloud vs that ‘fill in the blank’; REST vs unrest; this byte level encoding vs some different one; this framework vs that framework; this methodology vs that methodology; bare metal vs containers vs VMs vs unikernels; monoliths vs microservices vs nanoservices; eventually consistent vs transactional; mutable vs immutable; DevOps vs NoOps vs SysOps; scale-up vs scale-out; centralized vs decentralized; single threaded vs massively parallel; sync vs async. And so on ad infinitum.

It’s all pretty much the same shite, different day. We are just creating different ways of calling functions that we humans still have to write. The real power would be in getting a machine to write the functions. And that’s what Machine Learning can do: write functions for us. Machine Learning might, just might, be some different kind of shite for a different day.

Read the full article: Machine Learning Driven Programming on High Scalability

After abandoning its own GPU for supercomputers, machine learning, and video games in 2009, Intel has returned to the market with a new 72-core Xeon Phi, to compete with NVIDIA’s growing portfolio of GPUs.

The Xeon Phi ‘Knights Landing’ chip, announced at the International Supercomputing Conference in Frankfurt, Germany last week, is Intel’s most powerful and expensive chip to date and is aimed at machine learning and supercomputers, two areas where Nvidia’s GPUs have flourished.

Inside the chip are 72 cores running at 1.5GHz, alongside 16GB of integrated stacked memory. The chip supports up to 384GB of DDR4 memory, making it immensely scalable for machine learning programs.

Read the full article: Intel readies chip to rival NVIDIA for machine learning on readwrite

| Year | Event |
| --- | --- |
| 1958 | Earliest recorded use of the term “Singularity” by mathematician Stanislaw Ulam in his tribute to John von Neumann (1903-1957) |
| 1993 | First public use of the term “Singularity” by Vernor Vinge in an address to NASA entitled “The Coming Technological Singularity: How to Survive in the Post-Human Era” |
| 2005 | The Singularity Is Near: When Humans Transcend Biology by Ray Kurzweil is published. |
| 2006 | Inaugural “Singularity Summit” hosted by the Singularity Institute for Artificial Intelligence (now MIRI) |
| 2009 | Wired for Thought: How the Brain is Shaping the Future of the Internet by Jeffrey Stibel is published. |
| 2012 | MIRI study “How We’re Predicting AI—or Failing To” |
| 2040 | Median value year predicted for Artificial General Intelligence from the 2012 MIRI study. |


This post is intended to kick off a multi-part series on building an image processing pipeline. Presented here is an outline which will be updated and modified along the way.

My motivation and goal is to build a process that ingests photos (currently from a mobile device), sorts them into multiple categories, and performs an independent task on each photo after sorting.

A secondary goal is to build a reusable toolkit of scripts, one for each of these tasks.

The programming will be in Python.
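As a rough picture of the ingest, sort, and per-category task flow described above, here is a minimal sketch. Everything in it is an assumption for illustration: the function names, the filename-based `classify_by_name` rule, and the `TASKS` table are hypothetical stand-ins, not the scripts this series will end up with.

```python
from collections import defaultdict
from pathlib import Path


def sort_photos(paths, classify):
    """Group photo paths by the category label that classify() returns."""
    groups = defaultdict(list)
    for path in paths:
        groups[classify(path)].append(path)
    return dict(groups)


def run_pipeline(paths, classify, tasks):
    """Sort the photos, then run each category's task on its photos."""
    results = {}
    for category, photos in sort_photos(paths, classify).items():
        task = tasks.get(category)
        if task is None:
            continue  # no task registered for this category, skip it
        results[category] = [task(photo) for photo in photos]
    return results


# Hypothetical classifier: a real one would inspect the image itself.
def classify_by_name(path):
    return "receipt" if "receipt" in path.name.lower() else "other"


# Hypothetical per-category tasks: each returns a label instead of doing work.
TASKS = {
    "receipt": lambda p: f"ocr:{p.name}",
    "other": lambda p: f"archive:{p.name}",
}

photos = [Path("IMG_001_receipt.jpg"), Path("IMG_002.jpg")]
results = run_pipeline(photos, classify_by_name, TASKS)
```

Keeping the classifier and the task table as plain arguments means each piece can be swapped out or reused on its own, which fits the toolkit goal.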

Pipeline overview:

Progress so far:

Numbers one and two are easily crossed off the list. I have created a directory spy (item #3) in the past, but it needs to be reviewed and modified to work here.
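For reference, a directory spy can be as simple as a polling loop. The sketch below assumes that "directory spy" means a script that watches a folder for new image files. It is not the script mentioned above, and the names `scan_new_files` and `watch`, the suffix list, and the polling interval are all made up for illustration.

```python
import tempfile
import time
from pathlib import Path


def scan_new_files(directory, seen, suffixes=(".jpg", ".jpeg", ".png")):
    """Return image files not reported before and remember them in seen."""
    fresh = []
    for path in sorted(Path(directory).iterdir()):
        if path.is_file() and path.suffix.lower() in suffixes and path not in seen:
            seen.add(path)
            fresh.append(path)
    return fresh


def watch(directory, handle, interval=2.0, max_polls=None):
    """Poll the directory and call handle(path) once per new file."""
    seen = set()
    polls = 0
    while max_polls is None or polls < max_polls:
        for path in scan_new_files(directory, seen):
            handle(path)
        polls += 1
        time.sleep(interval)


# Small demonstration against a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    seen = set()
    (Path(tmp) / "a.jpg").touch()
    (Path(tmp) / "notes.txt").touch()  # ignored: not an image suffix
    first = [p.name for p in scan_new_files(tmp, seen)]
    (Path(tmp) / "b.png").touch()
    second = [p.name for p in scan_new_files(tmp, seen)]
```

Polling is the simplest option and needs only the standard library. An event-based watcher would react faster, at the cost of an extra dependency.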

Today [6/1/16], Google’s newest machine learning project released its first piece of generated art, a 90-second piano melody created through a trained neural network, provided with just four notes up front. The drums and orchestration weren’t generated by the algorithm, but added for emphasis after the fact.

It’s the first tangible product of Google’s Magenta program, which is designed to put Google’s machine learning systems to work creating art and music systems. The program was first announced at Moogfest last week.

Along with the melody, Google published a new blog post delving into Magenta’s goals, offering the most detail yet on Google’s artistic ambitions. In the long term, Magenta wants to advance the state of machine-generated art and build a community of artists around it — but in the short term, that means building generative systems that plug in to the tools artists are already working with. “We’ll start with audio and video support, tools for working with formats like MIDI, and platforms that help artists connect to machine learning models,” the team wrote in an announcement. “We want to make it super simple to play music along with a Magenta performance model.”

Read the full article: Google’s art machine just wrote its first song on The Verge

Also see:

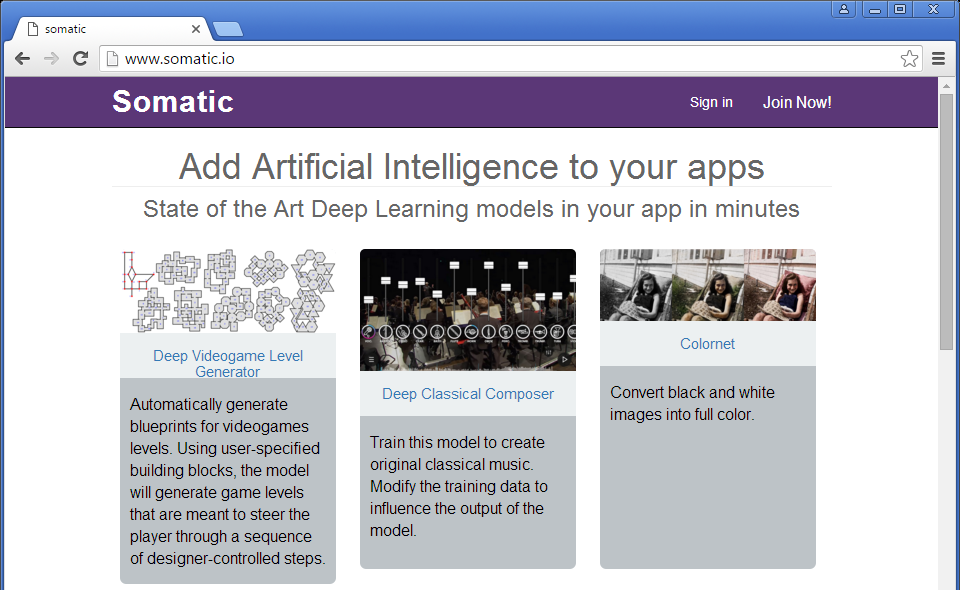

Link: http://www.somatic.io/

Pricing:

Currently 23 Models:

FC Layer in reference to Neural Networks in machine learning stands for:

Fully Connected Layer

Read more about Fully Connected layers: http://cs231n.github.io/convolutional-networks/#fc
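To make the term concrete, here is a minimal pure-Python sketch of a fully connected layer's forward pass, in which every output unit is a weighted sum of every input plus a bias. The function name `fully_connected` and the example numbers are illustrative assumptions. Real frameworks do the same thing with matrix operations and usually apply a non-linearity afterwards.

```python
def fully_connected(inputs, weights, biases):
    """Compute out[j] = sum_i weights[j][i] * inputs[i] + biases[j]."""
    if len(weights) != len(biases):
        raise ValueError("need one bias per output unit")
    outputs = []
    for row, bias in zip(weights, biases):
        if len(row) != len(inputs):
            raise ValueError("each weight row needs one weight per input")
        # Each output unit is connected to every input value.
        outputs.append(sum(w * x for w, x in zip(row, inputs)) + bias)
    return outputs


# Two inputs feeding two output units (illustrative numbers).
example = fully_connected([1.0, 2.0], [[1.0, 0.0], [0.5, 0.5]], [0.0, 1.0])
# example is [1.0, 2.5]
```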

GRU in reference to Neural Networks in machine learning stands for:

Gated Recurrent Unit

Read more about GRU networks: http://arxiv.org/pdf/1412.3555v1.pdf
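As a rough illustration, the sketch below runs one time step of a GRU with a single hidden unit, following the update rule in the paper linked above (new state = (1 - z) * old state + z * candidate). Reducing everything to scalars is a simplification for readability. The parameter names (`wz`, `uz`, `bz`, and so on) are made up for this example.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gru_step(x, h_prev, p):
    """One GRU time step for a single hidden unit (all values are scalars)."""
    z = sigmoid(p["wz"] * x + p["uz"] * h_prev + p["bz"])  # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h_prev + p["br"])  # reset gate
    # Candidate state: the reset gate scales how much old state is used.
    h_cand = math.tanh(p["wh"] * x + p["uh"] * (r * h_prev) + p["bh"])
    # Blend the old state and the candidate according to the update gate.
    return (1.0 - z) * h_prev + z * h_cand


# With all parameters at zero, both gates sit at 0.5 and the candidate is 0,
# so the new state is half of the old one.
names = ["wz", "uz", "bz", "wr", "ur", "br", "wh", "uh", "bh"]
zero_params = dict.fromkeys(names, 0.0)
h_next = gru_step(1.0, 2.0, zero_params)
```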

LSTM in reference to Neural Networks in machine learning stands for:

Long Short-Term Memory

Read more about LSTM Networks: http://colah.github.io/posts/2015-08-Understanding-LSTMs/
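For a concrete feel, here is a minimal sketch of one LSTM time step with a single hidden unit, using the common formulation with forget, input, and output gates plus a separate cell state. Working with scalars is a simplification, and the parameter names (`wf`, `uf`, `bf`, and so on) are assumptions made for this example.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM time step for a single unit; returns (h, c) as scalars."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    c = f * c_prev + i * g  # keep part of the old cell state, add new content
    h = o * math.tanh(c)    # expose a gated view of the cell state
    return h, c


# With all parameters at zero, every gate is 0.5 and the candidate is 0,
# so the cell state is simply halved.
names = ["wf", "uf", "bf", "wi", "ui", "bi", "wo", "uo", "bo", "wg", "ug", "bg"]
zero_params = dict.fromkeys(names, 0.0)
h_next, c_next = lstm_step(1.0, 0.0, 2.0, zero_params)
```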